Ah, poor Google. So full of really smart people, so detached from reality. I say this with great respect for my many friends and colleagues who work there. Your fundamental inhumanity is your tragic flaw, and the thing that made you so good at providing search is going to doom you in the social space.

Marie Antoinette Syndrome

As I mentioned, I know a dozen or so people at Google. These are really smart guys. I would guess their average IQ is around 135-145. That is great when you need to create complex distributed algorithms for a massive compute cluster. Not so great if you are trying to design something for ordinary humans.

*Figure: The IQ distribution curve (from Wikipedia)*

Think about this for a moment: the average IQ, by definition, is 100. An IQ of 145 is three standard deviations above the norm, representing roughly the top 0.1% of the population. Most important for our discussion, the gap between a person with an IQ of 145 and a typical person is the same as the gap between the average person on the street and someone with an IQ of 55. An IQ of 55 would signify moderate intellectual disability.
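To make the arithmetic concrete, here is a quick sketch that assumes IQ is normally distributed with mean 100 and standard deviation 15 (the usual convention for modern IQ scales; the function names are illustrative). It computes how many standard deviations a score sits from the mean, what share of the population scores at or above it, and the "mirror" score the same distance below the mean.

```python
import math

# Assumption: IQ scores are normally distributed with mean 100 and
# standard deviation 15 (the convention used by most modern IQ scales).
MEAN = 100.0
SD = 15.0


def z_score(iq: float) -> float:
    """How many standard deviations a score sits above (or below) the mean."""
    return (iq - MEAN) / SD


def fraction_at_or_above(iq: float) -> float:
    """Share of the population scoring at or above `iq` (normal upper tail)."""
    return 0.5 * math.erfc(z_score(iq) / math.sqrt(2))


def mirror_score(iq: float) -> float:
    """The score the same distance below the mean as `iq` is above it."""
    return 2 * MEAN - iq


for score in (135, 145):
    print(
        f"IQ {score}: z = {z_score(score):.2f}, "
        f"top {fraction_at_or_above(score):.2%} of the population, "
        f"mirror score {mirror_score(score):.0f}"
    )
```

Under these assumptions, 145 comes out at exactly three standard deviations, roughly the top 0.13% of the population, while 135 lands near the top 1%. The mirror of 145 is 55.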

Did you know that someone with an IQ of 55 is capable of doing repetitive tasks like housework, mowing lawns, laundry, etc., but would have a hard time doing critical reasoning tasks like working on a farm, harvesting crops, fixing fences, etc.? It is an interesting distinction. To someone with an average IQ, both kinds of tasks seem trivially easy, so it is very hard to keep these distinctions in mind when designing things.

This happens with other traits as well, not just IQ. I read a lot of fiction, and I often read stories where one of the characters is supposed to be incredibly brilliant – a genius of one kind or another. Or they feature characters who are fabulously rich, or who lead powerful government agencies or corporations. Unfortunately, the writers of these stories tend not to have high IQs or massive bank accounts themselves, so they cannot even imagine how high-IQ people think or how very wealthy people live. For instance, a recent book had a scene where an incredibly wealthy corporate head used his status and influence to cruise through a special lane at airport security. But an actual person like that would more likely have a corporate jet and never enter the public terminal at all. The idea that someone could have their own jet was simply beyond the writer’s imagination. It is like watching The Big Bang Theory – the writers claim that Sheldon has an IQ of 187, but they cannot even imagine how smart a person with an IQ of 145 is, much less 187. (And of course, that wouldn’t be nearly as funny…)

*Figure: The Big Bang Theory (from CBS)*

So any trait that sets people apart can have this same effect: IQ, beauty, emotional understanding, political power, money, etc. We might call this the Marie Antoinette syndrome, after the (likely apocryphal) story that the former queen, upon hearing that the peasants had no bread to eat, suggested that they eat cake instead.

Google has the same issue. They think they are designing things that will be simple and easy for everyone to understand, but they don’t seem to be able to tell when they go off the reservation. When they were just doing search, it didn’t matter. They could distill all of that brilliance into something super simple: a search box. But high IQ doesn’t mean high EQ. In fact, their EQ – emotional understanding – is probably just as low as their IQ is high. And in cases like social, where EQ is at the forefront, they simply can’t tell that what feels emotionally satisfying to them falls far below the bar of the typical human. They just cannot feel outside the box.

Let them +1 instead

Case in point: where Facebook has Like, Google has +1. To someone at Google, this makes perfect sense. After all, someone clicking “like” doesn’t really mean that they like the subject matter. It just means that they want to see it promoted. So if someone shares an article about some negative event, like a convicted killer being released on a technicality, it feels a little weird to click “Like”. A thumbs-up icon has the same issue. I just have to assume that people understand that I don’t like the fact that the killer got off; I just want to spread the outrage. +1 avoids this connotation. It is a neutral vote. Besides (the Google person would remind us), under the hood +1 is what all of these other things are doing anyway – incrementing an entry in some database table somewhere in the cloud.

*Figure: The +1 button, from Google+*

The problem is that this voting is not an emotion-free task. People click the Like or Thumbs Up button because they feel an emotion about the item. By moving from an emotional indicator like “Like” to an unemotional one, Google diminishes that feeling. Furthermore, “+1” is a math equation, and math in general does not have a neutral emotional connotation. For most of the population, math has a negative connotation. So if my sister likes my llama photo, she has to overcome the emotional dissonance between her positive feeling of llama love and her negative feeling about math just to click this button.

*Figure: Crazy Llamas. I like them. I don't +1 them.*

I know my Google friends are rolling their eyes now. “Dave,” they are saying, “+1 is not a math equation! It has nothing to do with math! It is just the same as Like!” Sorry, guys. The fact that these are the same to you just shows how out of touch with normal humans you are. No doubt you are still happy that you managed to shut down the people who wanted to add "-1", or "+0" as a refresh vote, or even a reputation-based currency that gave you a certain number of credits to add to or subtract from posts, etc. But let's be real here: if your buddy comes up to you and says “dude, I am getting married!”, it would be fine to say “I like!”. It is fine to give him a thumbs up. But who in their right mind would say “+1”? No one can emotionally get behind that. It is only sensible in some dystopian future where Google has finally fulfilled its mission statement.

Google+ has a ton of awesome functionality. It isn’t a matter of features. But it is riddled with things like the +1 that are a constant reminder that it isn’t designed for normal people. It is covered with – let’s call them “emotional edges” – sharp areas that make the whole thing an unpleasant place to hang out. For instance: circles. Great idea, almost. But why do you draw them as perfect circles? The one thing a social circle isn’t is perfect. You could have made them look a bit lumpy or hand-drawn or really ANYTHING but a geometric circle to indicate that you understand that circles are a fuzzy concept. And yet you don’t. Again, a small point, but combined with hundreds and hundreds of other small points, you get the overall emotional unpleasantness that is Google+.

*Figure: Google circles. Note the visual difference between friends and family. Um...*

*Figure: Here is what normal humans think when you say "friend circle". (via web image search)*

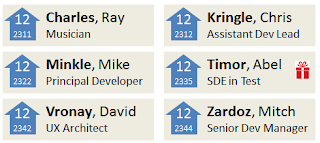

Social circles are interesting. Since the earliest days of computer-mediated social networking, researchers and designers have been trying to figure out how to capture social networks. On the one hand, we know that all of our “friends” are not created equal. Some are closer than others. Some are real friends, some are just co-workers, some are family. So it makes sense to categorize them. The downside is that, for many reasons, categorizing any individual person is quite difficult! Computer scientists tend to view this as a technical issue and add features like the ability to put people in multiple circles or define a hierarchy of groups, but the real problems are all emotional.

Adding someone to a circle is a form of labeling, and labeling another person is an emotional act. Is this guy just a co-worker or is he a friend? If I put him in co-workers, I am implicitly saying he is not a friend. I can overcome this emotion, of course. I can override this feeling and tell myself that these labels are not real, that they are just for convenience in distributing relevant social notifications, but that in itself takes emotional energy. This is actually one of the most underappreciated aspects of UX design – the energy that it takes to suppress the natural emotional response to a product.

There is a little equation I use to test the emotions of my designs:

The amount I care about something = The amount other people think I care about it = The amount of work I put into it

Read each equal sign as "should equal": if you designed it right, the equation holds. In other words, if I really like something, I should be able to spend a ton of time on it. And anyone who sees the end product should know I really cared about it. And if I don’t care about something, I shouldn’t have to spend any time on it at all, and anyone watching should know I don’t care.

*Figure: Greeting cards are a good match for different emotional expressions*

I call this the greeting card equation, because paper greeting cards solve this perfectly and computer greeting cards totally fail at it. Consider: if I really like you, I can go to the store and spend a ton of time finding the perfect card for your birthday. When I give it to you, you can tell the card is perfect and you know I must have spent a ton of time finding it. You might keep it by your desk for a few weeks to remind you of how special it is. On the other hand, if I am giving generic corporate cards to every client on their birthday, I just order a stock corporate card and spend almost no time on it. When the client gets it, they are maybe happy that I bothered to remember their birthday, but they know the card is not special. They don’t feel bad tossing it in the trash, and neither do I. Everything is in sync. But for online cards, this breaks down. I can’t really tell how much effort you put into the card, and because it just arrives in my email, I really have no way to treat it as special. The emotional connection has been removed.

Most software is terrible at this. Not only do I often spend too much time on things I don't care about, I often have no way of spending more time (in a meaningful way) on things I DO care about. Software tends to strip emotion out of the delivered artifact and make everything soulless. The exceptions are certain games, like World of Warcraft, where I can immediately tell if another player has spent a ton of time on their character because I can see them wearing epic drops that can only come from months or years of play. By contrast, many social network sites recognize and reward only visible activity, even though a user might spend hours and hours on the site reading things without commenting.

This emotional mismatch plays a large role in the next problem with circles, an even bigger one, which stems from the fact that humans and our relationships are not stable. Someone who is my BFF today might be merely a friend tomorrow and a stranger in five years. There is a high entropy factor in social networks. New groups are forming all the time, and old groups die out. Creating a group or adding people to a group is driven by a positive emotion. I care about my new friend, or my new Guys Night Out group, so it feels appropriate to spend the energy it takes to create the group or add the person to my friend list. But later, when the person is no longer my friend or the Guys Night Out plan has run its course, I no longer care about it. And because I don’t care, I don’t want to spend any energy on it, not even the energy to remove it. In fact, spending the energy to delete the group might inadvertently make the other group members think I cared about it, and make them feel bad for letting the group die.

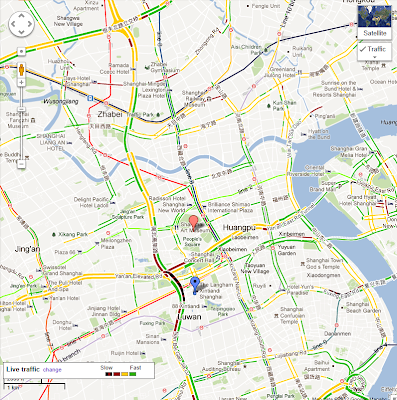

This is why social networking sites tend to decay over time. Because I never remove people, my Friendster or MySpace or Facebook account has a smaller and smaller percentage of meaningful relationships in it, and as a result it becomes less relevant over time. This is also why a new social network always feels somehow better than the last one. It has smarter people, more relevant conversations, etc. It is all because your social network in the new space has not had time to decay. This is a very difficult problem for computers to handle. In an ideal world, the computer would be able to infer your social groups for you based on your behavior. Unfortunately, Google, Facebook, and the like do not have sufficient signals to make this determination accurately. At least not yet, and not in the near future. For most people, there is still way too much of life that happens outside of the digital realm for the computer to be usefully accurate about this except in very limited domains. The one big advantage that Facebook has is that it was the first network to grab a significant set of older users, who have more stable social networks than teens or college students.

This brings us to the third issue. Humans are not computers. We are not always rational creatures. We do not think of our daily doings as activity feeds. Our social networks do not consist solely of people who use computers. In the late ’90s, long before Friendster and MySpace, I was working in Lili Cheng’s Social Computing Group at Microsoft Research. We were doing research on social networks and had a strong belief that these would be incredibly popular in the future. As part of this, Shelly Farnham and I went to a local mall and asked 100 or so random people to draw their “social network” on a blank piece of paper. This research was quite fascinating, and although it has been cited hundreds of times in the literature, none of its major findings has been incorporated into any major commercial product.

*Figure: A user's hand-drawn social network*

Here are some of the less intuitive findings:

· Every single person drew themselves in the center of the paper. Everyone sees themselves as the core of their social network, and they define others primarily by their distance from themselves. And yet, none of the major social networks visualizes things this way.

· People put positive as well as negative groups in their social networks. It was very common to find groups like “enemies”, “competitors”, “people I don’t talk to”, and the like. And yet social network software only has positive circles.

· Most people organize their networks either as groups like “friends”, “family”, and “co-workers”, or as relationships: a hierarchy of links directly connecting members of the network to each other, like mom->sister->nephew or boss->co-worker. Very few people used both. To me, this indicates that people have distinctly different ways of thinking about human relationships. Most of the popular sites support only groups, not relationships.

· Most people put figures in their social network that they have never contacted and likely never will. Examples might be the boss of their company, a sports team, a movie star, a church leader, etc. These tend to serve the role of emotional anchors for a group or as connection points. However, these are not just labels – they are thought of as full members of the social network in the user’s mind. Ironically, most existing social networks actually work hard to prevent you from adding people to your network that you cannot connect with. They claim they are doing the community a service by limiting you to “real friends”, but actually this just goes against the natural emotional flow.

· Related to the above, a surprising number of people have members of their social network that are not “contacts” in the traditional sense. Very common examples would be dead relatives, pets, fictional characters from a book or TV show, and God. Again, these sorts of entities are explicitly omitted from current networking sites.
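None of these findings requires exotic technology to support. Purely as a hypothetical sketch (the class, field, and member names below are illustrative, not taken from any real product or from the study itself), a data model that took them seriously might put the owner at the center with members arranged in rings by distance, allow negative groups alongside positive ones, store relationship edges as well as groups, and accept members who are not reachable contacts at all:

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Valence(Enum):
    POSITIVE = "positive"   # e.g. "friends", "family"
    NEGATIVE = "negative"   # e.g. "enemies", "people I don't talk to"


class MemberKind(Enum):
    CONTACT = "contact"          # someone you can actually reach
    ANCHOR = "anchor"            # boss, sports team, church leader: never contacted
    NON_CONTACT = "non_contact"  # pet, dead relative, fictional character


@dataclass(frozen=True)
class Member:
    name: str
    kind: MemberKind
    distance: int  # 1 = innermost ring around the owner at the center


@dataclass
class Group:
    label: str
    valence: Valence
    members: set[str] = field(default_factory=set)


@dataclass
class EgoNetwork:
    owner: str  # always drawn at the center
    members: dict[str, Member] = field(default_factory=dict)
    groups: dict[str, Group] = field(default_factory=dict)
    relationships: list[tuple[str, str, str]] = field(default_factory=list)

    def add(self, member: Member) -> None:
        self.members[member.name] = member

    def add_to_group(self, label: str, valence: Valence, name: str) -> None:
        if name not in self.members:
            raise KeyError(f"unknown member: {name}")
        # The valence is only used when the group is first created.
        group = self.groups.setdefault(label, Group(label, valence))
        group.members.add(name)

    def relate(self, source: str, target: str, label: str) -> None:
        # Relationship edges link members to each other (mom -> sister -> nephew),
        # independently of any group membership.
        for name in (source, target):
            if name != self.owner and name not in self.members:
                raise KeyError(f"unknown member: {name}")
        self.relationships.append((source, target, label))

    def ring(self, distance: int) -> list[str]:
        """Names of members at a given distance from the owner, sorted."""
        return sorted(m.name for m in self.members.values() if m.distance == distance)


net = EgoNetwork(owner="me")
for member in (
    Member("mom", MemberKind.CONTACT, 1),
    Member("sister", MemberKind.CONTACT, 1),
    Member("nephew", MemberKind.CONTACT, 2),
    Member("rex", MemberKind.NON_CONTACT, 1),  # the family dog
    Member("the boss", MemberKind.ANCHOR, 3),
    Member("rival", MemberKind.CONTACT, 3),
):
    net.add(member)

net.add_to_group("family", Valence.POSITIVE, "mom")
net.add_to_group("family", Valence.POSITIVE, "rex")
net.add_to_group("enemies", Valence.NEGATIVE, "rival")
net.relate("mom", "sister", "daughter")
net.relate("sister", "nephew", "son")
print(net.ring(1))  # ['mom', 'rex', 'sister']
```

Groups and relationship edges are kept as separate structures here on purpose: since very few people in the study mixed the two styles, a model like this would let each person use whichever one matches how they already think.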

So, those are some thoughts for all of you designing social networking sites. I hope they are interesting, and I look forward to seeing more humanity from you in the future.

PS: If you are interested in some alternative approaches to sharing and identity, try
